موازی سازی(Parallelism) چیست؟

ﺷﺮﮐﺖ ﻫﺎی ﺗﻮﻟﯿﺪ ﮐﻨﻨﺪه ﭘﺮدازﺷﮕﺮ ﺑﺮای اﻓﺰاﯾﺶ ﺳﺮﻋﺖ ﭘﺮدازﻧﺪه ﻣﺠﺒﻮر ﺑﻪ ﺑﺎﻻﺑﺮدن ﻓﺮﮐﺎﻧﺲ ﭘﺮدازﺷﮕﺮ ﺑﻮدﻧﺪ. ﯾﮏ راه اﻓﺰاﯾﺶ وﻟﺘﺎژ ﻣﺼﺮﻓﯽ ﭘﺮدازﻧﺪه ﺑﻮد ﮐﻪ دارای ﻧﻘﺎط ﺿﻌﻔﯽ ﻣﺎﻧﻨﺪ اﻓﺰاﯾﺶ دﻣﺎ و اﻓﺰاﯾﺶ ﻣﺼﺮف ﺑﺎﻃﺮی ﻧﯿﺰ ﺑﻮد. از ﻃﺮﻓﯽ ﺗﻮﻟﯿﺪ ﮐﻨﻨﺪﮔﺎن ﭘﺮدازﻧﺪه ﺑﻪ ﮐﻤﮏ ﺑﺮﻧﺎﻣﻪ ﻧﻮﯾﺴﺎن ﭘﯽ ﺑﻪ ﺑﯿﮑﺎری زﯾﺎد ﭘﺮدازﺷﮕﺮﻫﺎ در زﻣﺎن ﺳﻮﯾﭻ ﮐﺮدن ﻓﺮاﯾﻨﺪ ﻫﺎ و ﻧﺦ ﻫﺎ ﺷﺪﻧﺪ ﮐﻪ ﺣﺪود ﻧﯿﻤﯽ از زﻣﺎن ﭘﺮدازش را ﺑﻪ ﻫﺪر ﻣﯽ داد. ﺑﺮاي ﺟﺒﺮان ﺣﺎﻓﻈﻪ ﮐﺶ را ﮔﺴﺘﺮش دادﻧﺪ اﻣﺎ ﺑﻪ دﻟﯿﻞ ﮔﺮان ﺑﻮدﻧﺶ ﺑﺎز دﭼﺎر ﻣﺤﺪودﯾﺖ ﺑﻮدﻧﺪ. ﺑﻨﺎﺑﺮاﯾﻦ ﭘﺮدازﻧﺪه ﻫﺎﯾﯽ ﺗﻮﻟﯿﺪ ﮐﺮدﻧﺪ ﮐﻪ ﺑﺘﻮاﻧﺪ ﭘﺮازش ﻣﻮازي را (در اﺑﺘﺪا) در دو ﻫﺴﺘﻪ ﺑﻪ اﺟﺮا ﺑﺮﺳﺎﻧﻨﺪ.

ﻧﺎم اﯾﻦ ﻫﺴﺘﻪ ﻫﺎ ﻫﺴﺘﻪ ﻫﺎی ﺳﺨﺖ اﻓﺰاری ﯾﺎ ﻓﯿﺰﯾﮑﯽ ﮔﺬاﺷﺘﻨﺪ. اﻧﺪك زﻣﺎﻧﯽ ﺑﻌﺪ ﻓﻨﺎوری ای ﺑﺮای رﺳﯿﺪن ﺑﻪ ﭘﺮدازش ﻣﻮازی اﻣﺎ در ﺳﻄﺢ ﻣﺤﺪود Hyper ﺗﺮی و ارزان ﺗﺮ ﺑﺎ ﻧﺎم اﺑﺮ ﻧﺨﯽ ﯾﺎ Hyper-Threading اراﺋﻪ ﮐﺮدﻧﺪ و ﻧﺎم آن را ﻫﺴﺘﻪ ﻫﺎی ﻣﻨﻄﻘﯽ ﯾﺎ ﻧﺦ ﻫﺎی ﺳﺨﺖ اﻓﺰاری ﮔﺬاﺷﺘﻨﺪ. ﺣﺎل ﻧﻮﺑﺖ ﺑﺮﻧﺎﻣﻪ ﻧﻮﯾﺴﺎن ﺑﻮد ﺗﺎ ﺑﺮﻧﺎﻣﻪ ﻫﺎی ﺑﺮاي اﺳﺘﻔﺎده از اﯾﻦ ﻓﻨﺎوری ﻫﺎی ﻧﻮﯾﻦ ﺑﻨﻮﯾﺴﻨﺪ. ﺑﺮﻧﺎﻣﻪ ﻧﻮﯾﺴﯽ ﻣﻮازی ﻋﻨﻮاﻧﯽ اﺳﺖ، ﮐﻪ ﻣﻮﺿﻮﻋﯽ ﮔﺴﺘﺮده در دﻧﯿﺎي ﻧﺮم اﻓﺰار اﯾﺠﺎد ﮐﺮده است.

روش موازی سازی(Parallelism(

ﺳﺎده ﺗﺮﯾﻦ ﺷﯿﻮه ﻣﻮازي ﺳﺎزي در ﻗﺎﻟﺐ Task ها ﺻﻮرت ﻣﯽ ﮔﯿﺮد، در ﻫﺮ Task ﺗﺎﺑﻊ ﯾﺎ ﻗﻄﻌﻪ ﮐﺪي ﻧﻮﺷﺘﻪ ﻣﯽ ﺷﻮد و ﺳﭙﺲ ﺑﻮﺳﯿﻠﻪ Delegate اي ﮐﻪ ﮐﺎر ﻣﺪﯾﺮﯾﺖ Task ﻫﺎ را ﺑﺮ ﻋﻬﺪه دارد اﯾﻦ Task ﻫﺎ ﺑﺼﻮرت ﻣﻮازی ﺑﺴﺘﻪ ﺑﻪ ﻫﺴﺘﻪ ﻫﺎی ﻣﻨﻄﻘﯽ در دﺳﺘﺮس اﺟﺮا ﻣﯽ ﺷﻮﻧﺪ. روش ﻫﺎی ﺑﺴﯿﺎری ﺑﺮاي ﻣﻮازی ﺳﺎزی وﺟﻮد دارد ﻣﺎﻧﻨﺪ اﺳﺘﻔﺎده از ﮐﻼس Parallel.For یا Parallel.ForEach ﮐﻪ در ﺟﺎي ﺧﻮد ﮐﺎرﺑﺮد ﻫﺎی ﻣﺨﺘﺺ ﺑﻪ ﺧﻮدﺷﺎن را دارﻧﺪ. همیشه الگورﯾﺘﻢ ﻫﺎی ﺗﺮﺗﯿﺒﯽ را ﻧﻤﯽ ﺗﻮان ﺑﻪ الگورﯾﺘﻤﯽ ﻣﻮازي ﺗﺒﺪﯾﻞ ﮐﺮد ﭼﺮا ﮐﻪ ﮐﺪ ﻫﺎی ﺗﺮﺗﯿﺒﯽ ای ﻫﺴﺘﻨﺪ ﮐﻪ اﺟﺮاي ﮐﺪ ﻫﺎي دﯾﮕﺮ ﻧﯿﺎز ﺑﻪ ﺗﮑﻤﯿﻞ ﺷﺪن آن ﻫﺎ دارد. ﺑﺴﺘﻪ ﺑﻪ الگورﯾﺘﻢ درﺻﺪی از آن را ﻣﯽ ﺗﻮان ﻣﻮازی ﮐﺮد. ﻗﺒﻞ از ﻣﻮازی ﺳﺎزی ﺑﺎﯾﺪ ﻣﻮازی ﺳﺎزی را در ذﻫﻨﺘﺎن ﻃﺮاﺣﯽ ﮐﻨﯿﺪ.

Parallel Programming یا برنامه نویسی موازی یعنی تقسیم یک مسئله به مسائل کوچکتر و سپردن آن ها به واحد های جداگانه برای پردازش کردن.این مسائل کوچک به صورت همزمان شروع به اجرا می کنند. Parallel Programming وظیفه یا Task را به اجزا مختلفی تقسیم می کند.

فرم های مختلفی از Parallel وجود دارد .مانند bit-level ، instruction-level، data ، taskدر این آموزش راجع به Data Parallelism و Task Parallelism بحث خواهیم کرد.

تصور کنید هسته CPUمتشکل از چندین ریزپردازنده است که همه این ها به حافظه اصلی دسترسی دارند.هر کدام از این ریزپردازنده ها قسمتی از مسئله را حل می کنند.

Data Parallelism

این مورد بر روی توزیع دیتا در نقاط مختلف تمرکز می کند.یعنی داده را به بخش های مختلفی می شکند.هر کدام از این بخش ها به Thread جداگانه ای برای پردازش داده می شود.

Task Parallelism

این مفهوم وظایف یا Taskها را به بخش هایی شکسته و هر کدام را به یک Thread جهت پردازش می دهد.

در پروژه ای که به صورت ضمیمه این مقاله می باشد (در پروژه DataParallisem)به سه صورت مختلف وظیفه یا Task تعریف شده است.

1-به صورت Function

2- به صورت Delegate–

3-به صورت لامبدا

کد این قسمت به صورت زیر می باشد

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

namespace TPL_part_1_creating_simple_tasks

{

class Program

{

static void Main(string[] args)

{

//Action delegate

Task task1 = new Task(new Action(HelloConsole));

//anonymous function

Task task2 = new Task(delegate

{

HelloConsole();

});

//lambda expression

Task task3 = new Task(() = > HelloConsole());

task1.Start();

task2.Start();

task3.Start();

Console.WriteLine("Main method complete. Press any key to finish.");

Console.ReadKey();

}

static void HelloConsole()

{

Console.WriteLine("Hello Task");

}

}

}

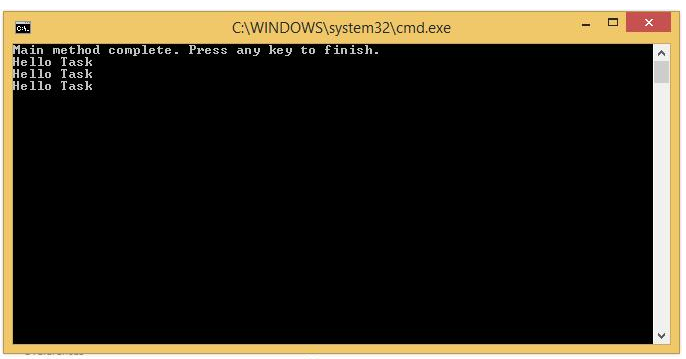

بعد از اجرا، هر کدام از Task ها اجرا شده البته به صورت همزمان و کارهای محوله به آنها را انجام میدهند.

در این آموزش بر روی مفهوم Data Parallelism تمرکز خواهیم کرد.توسط Data Parallelism عملیات یکسانی بر روی المانهای یک مجموعه یا آرایه به صورت همزمان انجام خواهد شد. که در فضای نام System.Threading.Tasks.Parallel قرار دارد. روش اصلی برای انجام Data Parallelismنوشتن یک تابع است که یک حلقه ساده بدون Thread دارد .

public static void DataOperationWithForeachLoop()

{

var mySource = Enumerable.Range(0, 1000).ToList();

foreach (var item in mySource)

{

Console.WriteLine("Square root of {0} is {1}", item, item * item);

}

}

خروجی برنامه را در زیر می بینید

عملیات Data Parallelism را می توان با یک حلقه foreach موازی هم انجام داد.

public static void DataOperationWithDataParallelism()

{

var mySource = Enumerable.Range(0, 1000).ToList();

Parallel.ForEach(mySource, values = > CalculateMyOperation(values));

}

public static void CalculateMyOperation(int values)

{

Console.WriteLine("Square root of {0} is {1}", values, values * values);

}

بعد از اجرا شکل زیر را خواهید دید.

در این کد در داخل حلقه Foreach یک تابع Delegate قرار دادیم در این تابع به ازای هر تکرار حلقه بر روی مجموعه تابعی که درون Delegate فراخوانی کرده ایم اجرا خواهد شد.

Data Parallelism توسط PLINQ

PLINQ به معنای Parallel LINQ است .این نسخه از لینک جهت پیاده سازی لینک بر روی پردازنده های چند هسته ای نوشته شده است.

توسط لینک می توان اطلاعات را از چندین منبع بازیابی کرد.و در نهایت این نتایج با هم ترکیب می شوند تا نتیجه نهایی Query به دست آید.اما اگر از PLINQاستفاده کنیم این دستورات به جای اینکه پشت سر هم اجرا شوند به صورت موازی اجرا می شوند.

برای این که از PLINQ استفاده کرد فقط کافی است که در انتهای عبارت لینک از AsParallel استفاده کنیم.به کد زیر توجه کنید

public static void DataOperationByPLINQ()

{

long mySum = Enumerable.Range(1, 10000).AsParallel().Sum();

Console.WriteLine("Total: {0}", mySum);

}

بعد از اجرا شکل زیر را خواهید دید

برای به دست آوردن اعداد فرد در این مجموعه توسط Plinq از کد زیر استفاده می کنیم

public static void ShowEvenNumbersByPLINQ()

{

var numers = Enumerable.Range(1, 10000);

var evenNums = from number in numers.AsParallel()

where number % 2 == 0

select number;

Console.WriteLine("Even Counts :{0} :", evenNums.Count());

}

پس از اجرا شکل زیر را خواهید دید

توسط متد Parallel.Invoke() می توانید چندین متد را مانند شکل زیر به صورت همزمان اجرا کنید.

Parallel.Invoke( () = > Method1(mycollection), () = > Method2(myCollection1, MyCollection2), () = > Method3(mycollection));

MaxDegreeOfParallelism

ماکزیمم تعداد پردازش های موازی را مشخص می کند در کد زیر و در داخل Foreach در پارامتر دوم ماکزیمم تعداد پردازش های موازی مشخص شده اشت.

loopState.Break()

توسط این کد به Thread هایی که پردازش آنها طول کشیده اجازه می دهیم که بعدا Break شوند.به کد زیر توجه کنید.

var mySource = Enumerable.Range(0, 1000).ToList();

int data = 0;

Parallel.ForEach(

mySource,

(i, state) = >

{

data += i;

if (data > 100)

{

state.Break();

Console.WriteLine("Break called iteration {0}. data = {1} ", i, data);

}

});

Console.WriteLine("Break called data = {0} ", data);

Console.ReadKey();

منبع

فایل ضمیمه این آموزش

TPL_part_1_creating_simple_tasks

رمز فایل: behsanandish.com

دانلود کتاب آموزش برنامه نویسی موازی با #C

Parallel Programming In Csharp

رمز فایل: behsanandish.com